After many years, AI has made it possible to develop and deploy applications in days.

With just a couple of tools, API development has become streamlined: with the right toolset, you can design, test, and deploy. A solo developer or a team can build an application and its backend without breaking a sweat.

What started out as revolutionary has now created its own set of problems.

Yet only a fraction of teams manage to deploy on time and keep up with maintenance.

Now that we build APIs much faster than before, design alone isn’t enough. Yet we are skipping the work that testing requires, along with the visibility needed to see how APIs perform under real traffic.

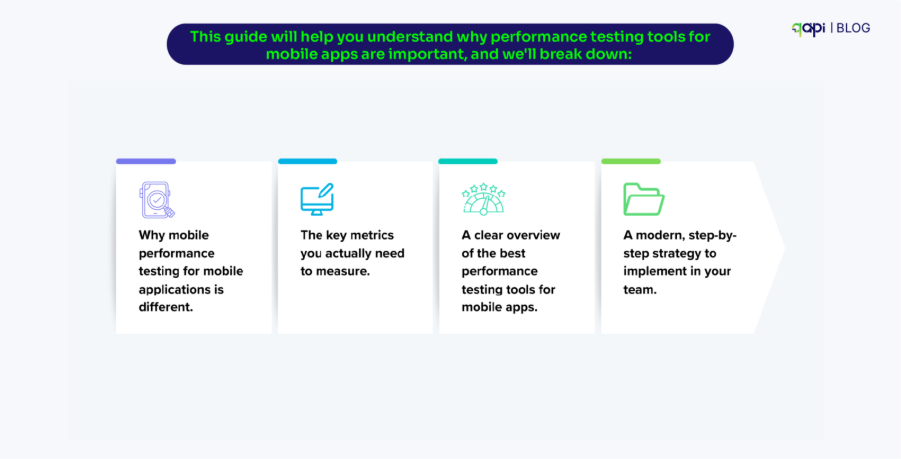

API testing is non-negotiable in 2026.

What is wrong with the way people are testing their APIs?

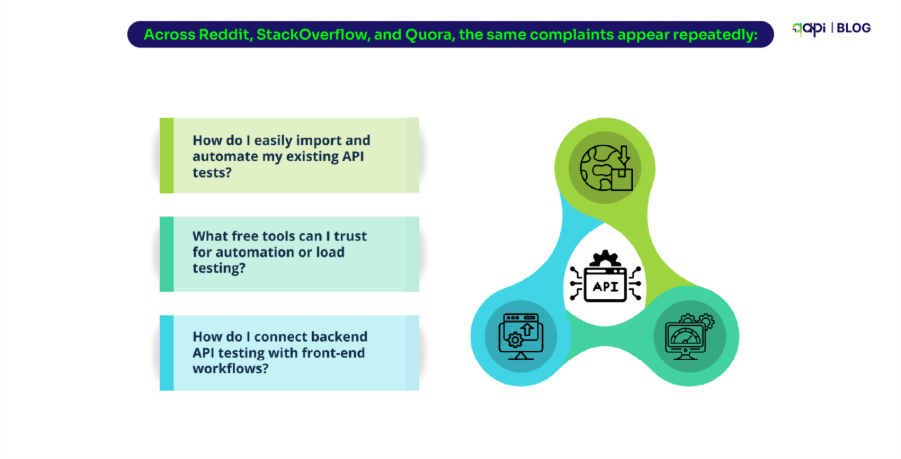

If you visit Reddit or Stack Overflow, you’ll find plenty of questions about API testing and how to do it effectively. For example, here’s a user asking a basic question.

I agree with the views shared in these forums, because there’s no clear-cut, step-by-step approach to API testing.

How does one know which tool or API testing method is best? And how does one build that practice and replicate it?

So, this blog post aims to make it simple.

API testing: manual or automated?

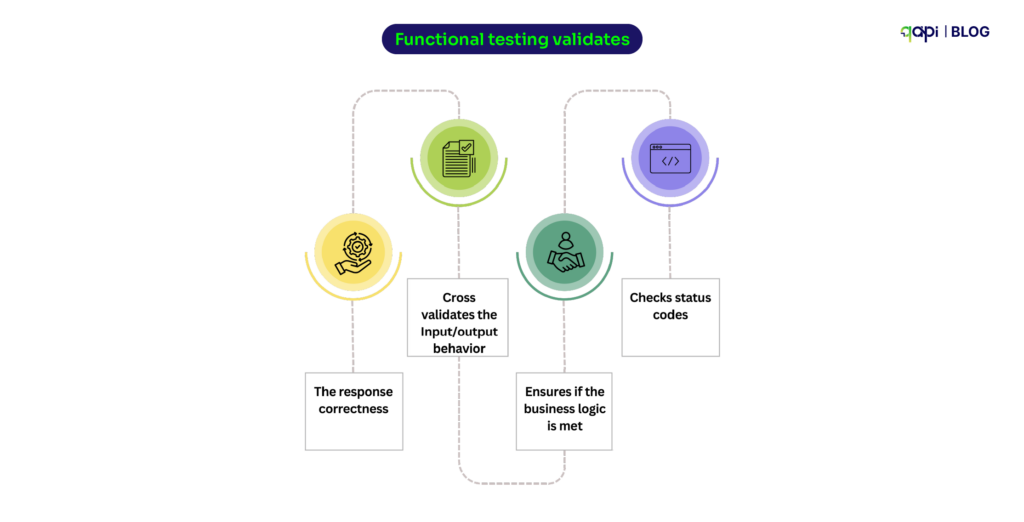

First things first: testing is not just about functional checks.

The problem is that we don’t test in ways that scale.

Either teams go all in on manual testing, running in circles until they are exhausted, or they spend their time rechecking and validating what their automated testing tool missed.

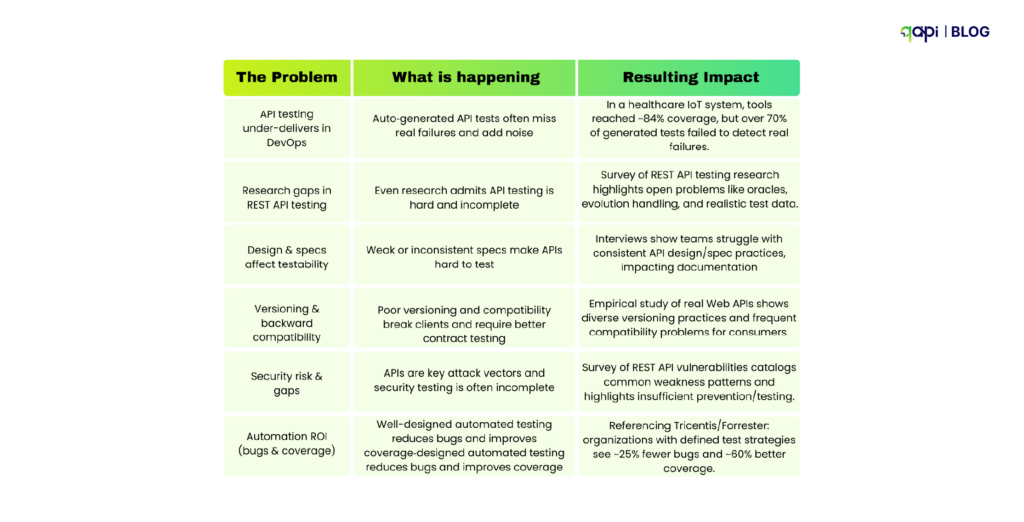

The first problem is flaky tests, which reduce confidence in the entire CI/CD process. These tests pass intermittently, often succeeding on reruns without any changes, due to race conditions, shared or unstable test data, inconsistent environments, or unreliable external dependencies.

The result is clogged pipelines, delayed deployments, and a growing tendency for engineers to ignore legitimate failures.
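A common first step is to rerun a failing test several times against unchanged code and check whether the outcomes are mixed. The sketch below assumes tests are plain Python callables that raise `AssertionError` on failure. `classify_test` and the `check_*` functions are hypothetical names used for illustration and are not part of any particular tool.

```python
import itertools
from typing import Callable

def classify_test(test: Callable[[], None], runs: int = 10) -> str:
    """Rerun a test with no code changes and classify the overall outcome.

    Returns "pass" if every run passed, "fail" if every run failed,
    and "flaky" if the results were mixed.
    """
    passes = 0
    for _ in range(runs):
        try:
            test()
            passes += 1
        except AssertionError:
            pass  # a failed run; keep going so the pattern becomes visible
    if passes == runs:
        return "pass"
    if passes == 0:
        return "fail"
    return "flaky"

# Hypothetical checks. The first fails every third run, standing in for a
# race condition or an unreliable downstream dependency.
_status_updates = itertools.cycle([True, True, False])

def check_order_status() -> None:
    assert next(_status_updates), "order status not updated yet"

def check_health_endpoint() -> None:
    assert {"status": "ok"}["status"] == "ok"

def check_wrong_status() -> None:
    assert {"status": "ok"}["status"] == "error"

print(classify_test(check_order_status))  # flaky
```

One option is to quarantine tests classified as flaky instead of letting them block every merge. This keeps legitimate failures visible while the root cause is fixed.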

The second bottleneck is excessive test maintenance. Teams often spend 40–60% of their QA time simply repairing broken tests. This happens when tests are brittle: they rely on hardcoded data, make overly precise assertions, are tightly coupled to implementation details, or use expired fixtures.

As a result, even a minor change in the application can trigger widespread test failures, slowing release cycles and accruing significant technical debt.

A snowball effect, for all the wrong reasons.
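To make the difference concrete, here is a sketch that contrasts an exact-match assertion with one that checks only what a consumer actually depends on. The payload, field names, and allowed plan values are all hypothetical.

```python
from typing import Any

# A hypothetical response body from GET /users/{id}.
response = {
    "id": 42,
    "email": "ada@example.com",
    "created_at": "2026-01-15T09:30:00Z",
    "plan": "pro",
    "feature_flags": ["beta-search"],
}

def brittle_check(body: dict[str, Any]) -> bool:
    # Exact match: breaks whenever a timestamp, a flag, or a new field changes.
    return body == {
        "id": 42,
        "email": "ada@example.com",
        "created_at": "2026-01-15T09:30:00Z",
        "plan": "pro",
        "feature_flags": ["beta-search"],
    }

def resilient_check(body: dict[str, Any]) -> bool:
    # Assert only what consumers rely on: required fields, types, invariants.
    return (
        isinstance(body.get("id"), int)
        and isinstance(body.get("email"), str)
        and "@" in body["email"]
        and body.get("plan") in {"free", "pro", "enterprise"}
        and isinstance(body.get("created_at"), str)
    )
```

Adding a `locale` field or changing `created_at` breaks `brittle_check` but not `resilient_check`. An invalid plan value still fails both checks.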

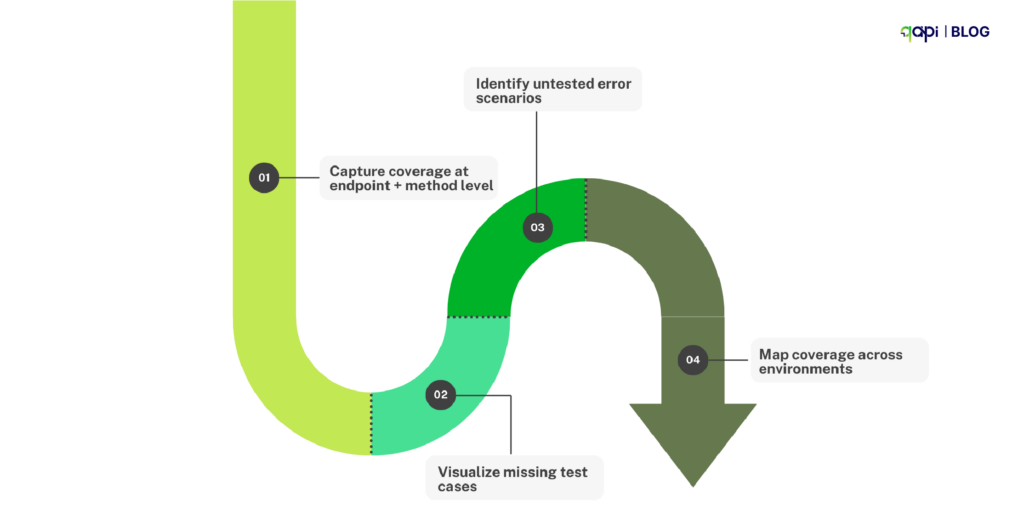

The third issue is dangerous coverage gaps. While happy-path scenarios are usually well-tested, critical areas such as error handling, edge cases, security checks, and performance under load remain insufficiently covered.

This happens because maintenance burdens crowd out the creation of new tests, and it is difficult to safely simulate complex real-world conditions. Consequently, bugs and vulnerabilities often go undetected until they reach production.
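Edge cases and error handling are cheapest to cover when they are expressed as data instead of hand-written tests. The sketch below uses a hypothetical `create_order` function as a stand-in for a `POST /orders` handler. The status codes and quantity limits are assumptions made for illustration.

```python
from typing import Any

def create_order(payload: Any) -> tuple[int, dict[str, str]]:
    """Hypothetical handler standing in for POST /orders."""
    if not isinstance(payload, dict):
        return 400, {"error": "body must be a JSON object"}
    qty = payload.get("quantity")
    # bool is a subclass of int in Python, so reject it explicitly.
    if not isinstance(qty, int) or isinstance(qty, bool):
        return 422, {"error": "quantity must be an integer"}
    if qty <= 0 or qty > 1000:
        return 422, {"error": "quantity out of range"}
    if not payload.get("sku"):
        return 422, {"error": "sku is required"}
    return 201, {"status": "created"}

# Each case: (description, payload, expected status code).
CASES = [
    ("happy path", {"sku": "A-1", "quantity": 2}, 201),
    ("non-object body", ["sku", "A-1"], 400),
    ("missing quantity", {"sku": "A-1"}, 422),
    ("boolean quantity", {"sku": "A-1", "quantity": True}, 422),
    ("zero quantity", {"sku": "A-1", "quantity": 0}, 422),
    ("upper boundary", {"sku": "A-1", "quantity": 1000}, 201),
    ("above boundary", {"sku": "A-1", "quantity": 1001}, 422),
    ("empty sku", {"sku": "", "quantity": 1}, 422),
]

def run_cases() -> list[str]:
    """Return a description of every case whose status code did not match."""
    failures = []
    for name, payload, expected in CASES:
        status, _ = create_order(payload)
        if status != expected:
            failures.append(f"{name}: expected {expected}, got {status}")
    return failures
```

With this layout, a new edge case is one more line in `CASES`, so coverage can grow without adding much maintenance.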

These are just the major concerns; the list goes on.

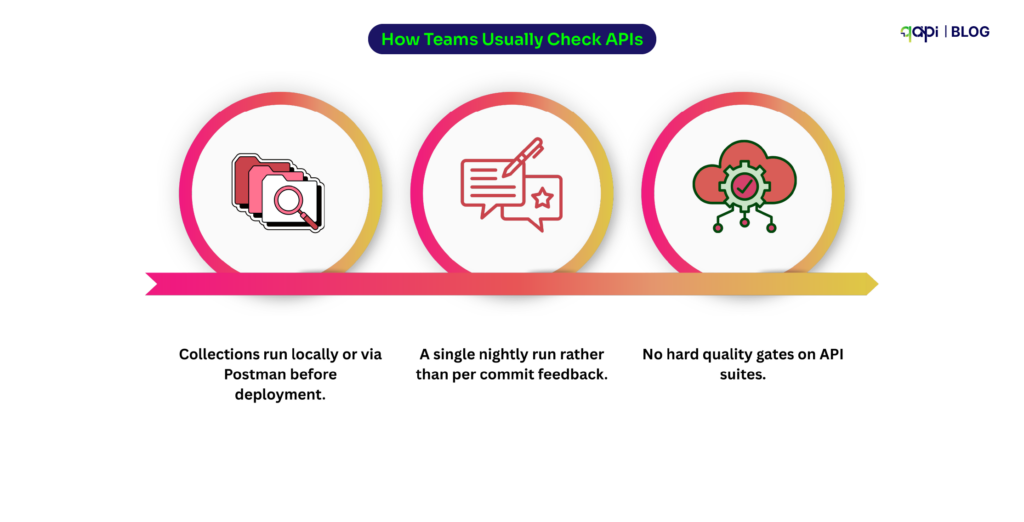

API testing is often still a semi-manual checkpoint near the end of a release:

• Collections run locally or via Postman before deployment.

• A single nightly run rather than per-commit feedback.

• No hard quality gates on API suites.

Setups like these lead to the problems above, and more.
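Moving from a nightly run to a hard per-commit gate can be as simple as failing the CI job whenever the API suite's pass rate drops below a threshold. This sketch assumes your test runner can write a JUnit-style XML report, which many runners can. The default threshold and the command-line usage are assumptions.

```python
import sys
import xml.etree.ElementTree as ET

def quality_gate(junit_xml: str, min_pass_rate: float = 1.0) -> tuple[bool, float]:
    """Evaluate a JUnit-style XML report against a minimum pass rate."""
    root = ET.fromstring(junit_xml)
    # Reports may use a <testsuites> wrapper or a single <testsuite> root.
    suites = [root] if root.tag == "testsuite" else root.findall("testsuite")
    total = failed = skipped = 0
    for suite in suites:
        total += int(suite.get("tests", 0))
        failed += int(suite.get("failures", 0)) + int(suite.get("errors", 0))
        skipped += int(suite.get("skipped", 0))
    executed = total - skipped
    if executed <= 0:
        return False, 0.0  # an empty run should never pass the gate
    rate = (executed - failed) / executed
    return rate >= min_pass_rate, rate

if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1], encoding="utf-8") as fh:
        ok, rate = quality_gate(fh.read())
    print(f"API suite pass rate: {rate:.1%}")
    sys.exit(0 if ok else 1)  # a non-zero exit code fails the CI job
```

In CI, you would run this after the test step, for example as `python api_gate.py results.xml` (a hypothetical filename). A non-zero exit code blocks the merge.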

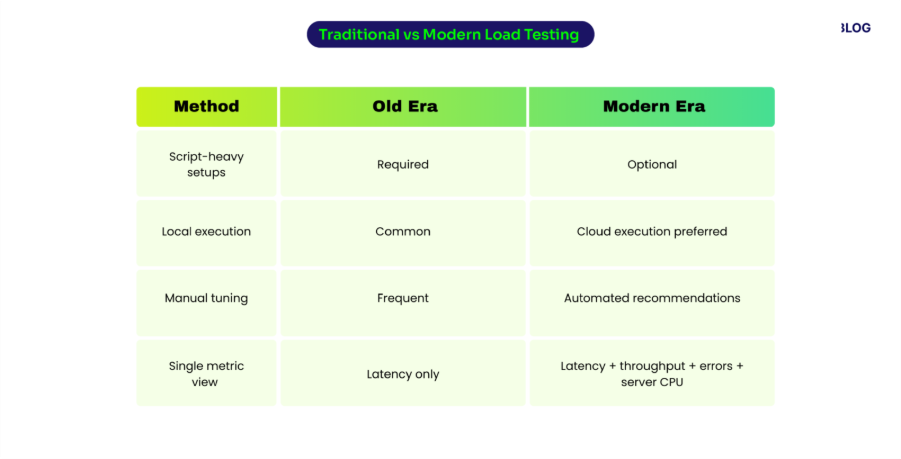

Little or no performance/load testing

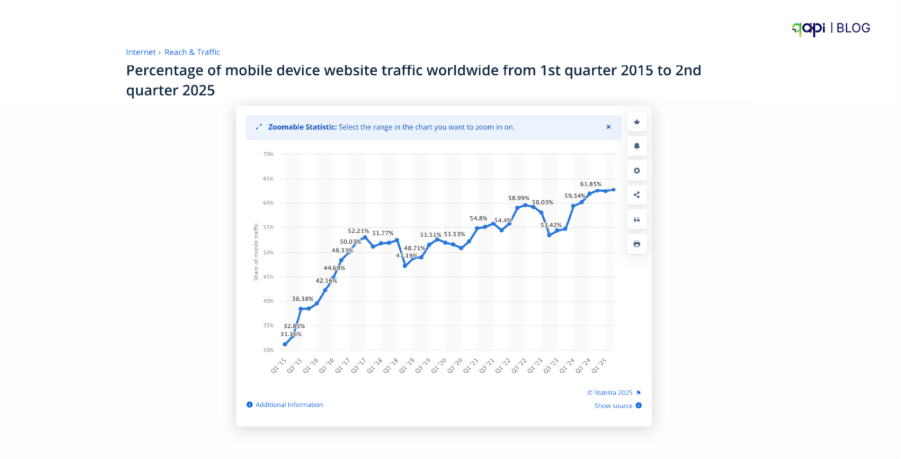

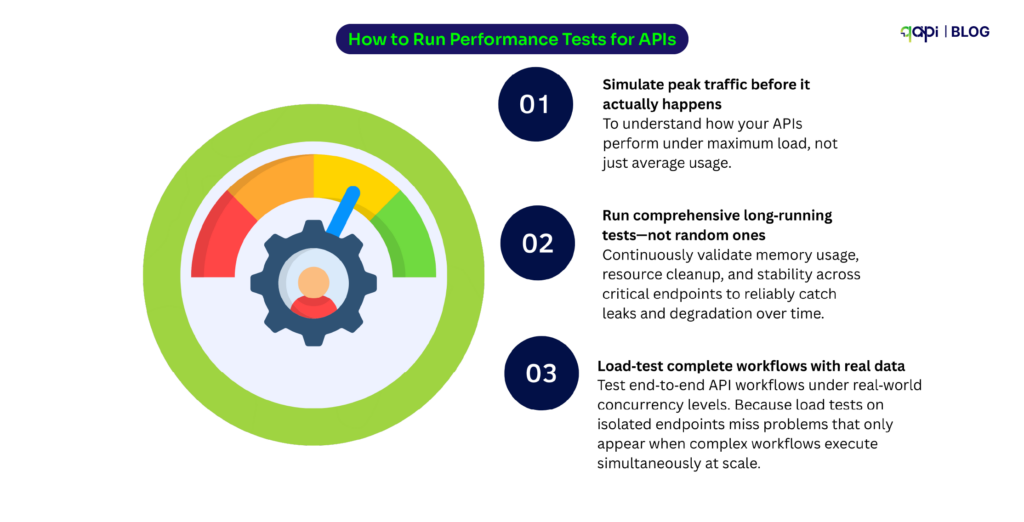

Functional tests answer “Does it work?”, while performance tests answer “Will it still work when it matters?” Many teams never test performance systematically and instead pick scenarios at random:

• A one-off peak-traffic simulation before a major sale.

• A randomly chosen long-running test that catches obvious memory leaks but misses the rest.

• A partial load test on a complex workflow, without realistic concurrency.

The result? You have numbers, and a new set of problems.

And the worst part is that you can’t connect the dots, because the data is cluttered.
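A repeatable load test does not have to be elaborate. The sketch below uses `asyncio` to send a fixed number of requests at a controlled concurrency level, then reports latency percentiles and throughput. `fake_endpoint` is a hypothetical stand-in that simulates 5 to 20 ms of latency; in a real test it would be an actual HTTP call to the API under test.

```python
import asyncio
import random
import statistics
import time

async def fake_endpoint(rng: random.Random) -> None:
    """Hypothetical stand-in for an API call: 5-20 ms of simulated latency."""
    await asyncio.sleep(rng.uniform(0.005, 0.020))

async def load_test(total_requests: int, concurrency: int, seed: int = 0) -> dict[str, float]:
    rng = random.Random(seed)
    sem = asyncio.Semaphore(concurrency)  # caps the number of in-flight requests
    latencies: list[float] = []

    async def one_request() -> None:
        async with sem:
            # Timed after acquiring the semaphore, so client-side queueing is excluded.
            start = time.perf_counter()
            await fake_endpoint(rng)
            latencies.append(time.perf_counter() - start)

    wall_start = time.perf_counter()
    await asyncio.gather(*(one_request() for _ in range(total_requests)))
    wall = time.perf_counter() - wall_start
    cuts = statistics.quantiles(latencies, n=100)  # 99 percentile cut points
    return {
        "requests": float(len(latencies)),
        "p50_ms": cuts[49] * 1000,
        "p95_ms": cuts[94] * 1000,
        "throughput_rps": len(latencies) / wall,
    }

if __name__ == "__main__":
    print(asyncio.run(load_test(total_requests=200, concurrency=20)))
```

The request count, concurrency, and seed are all parameters, so the same scenario can run on every release. That makes results comparable over time instead of one-off.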

How Microservices Multiply the Problem

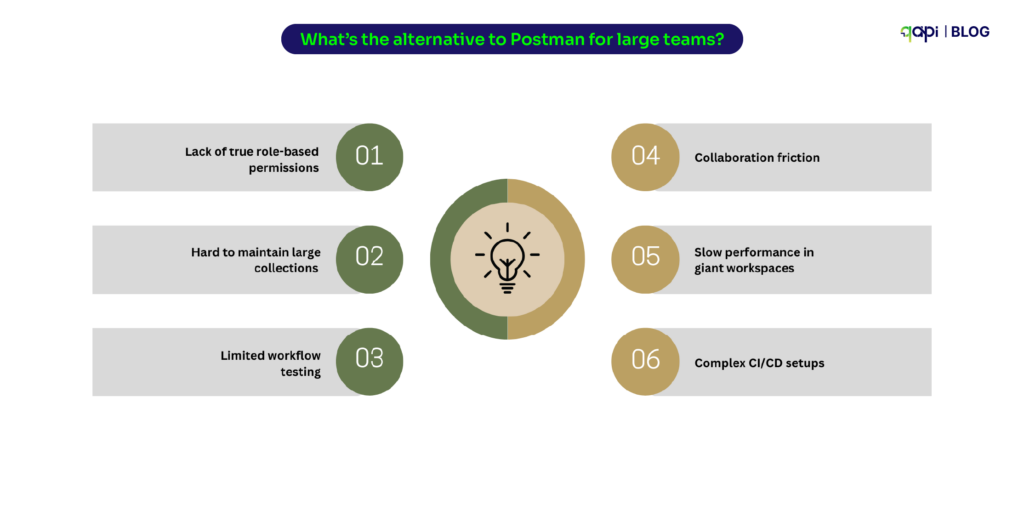

A microservices architecture multiplies testing complexity because multiple teams build services independently. This leads to variation in coding standards, testing procedures, and tooling.

It also leads to configuration drift between dev, test, and production environments, creating hard-to-debug issues.
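Drift is easier to manage once it is visible. This sketch compares each environment's settings against a baseline, here production. The setting names and values are hypothetical; in practice they would be loaded from each environment's config source.

```python
from typing import Any

def config_drift(
    envs: dict[str, dict[str, Any]], baseline: str
) -> dict[str, dict[str, tuple[Any, Any]]]:
    """Compare each environment's settings against a baseline environment.

    Returns {env: {key: (baseline_value, env_value)}} for every key whose
    value differs; a key missing from one side is shown as None.
    """
    base = envs[baseline]
    report: dict[str, dict[str, tuple[Any, Any]]] = {}
    for name, cfg in envs.items():
        if name == baseline:
            continue
        diffs = {
            key: (base.get(key), cfg.get(key))
            for key in sorted(base.keys() | cfg.keys())
            if base.get(key) != cfg.get(key)
        }
        if diffs:
            report[name] = diffs
    return report

# Hypothetical settings for one service across three environments.
ENVS = {
    "prod": {"timeout_ms": 2000, "retries": 3, "auth_mode": "oauth2", "db_pool": 50},
    "test": {"timeout_ms": 2000, "retries": 3, "auth_mode": "oauth2", "db_pool": 10},
    "dev": {"timeout_ms": 30000, "retries": 0, "auth_mode": "none"},
}
```

Some differences, such as a smaller database pool in test, may be intentional. The goal is to make every difference explicit rather than discovering it during an incident.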

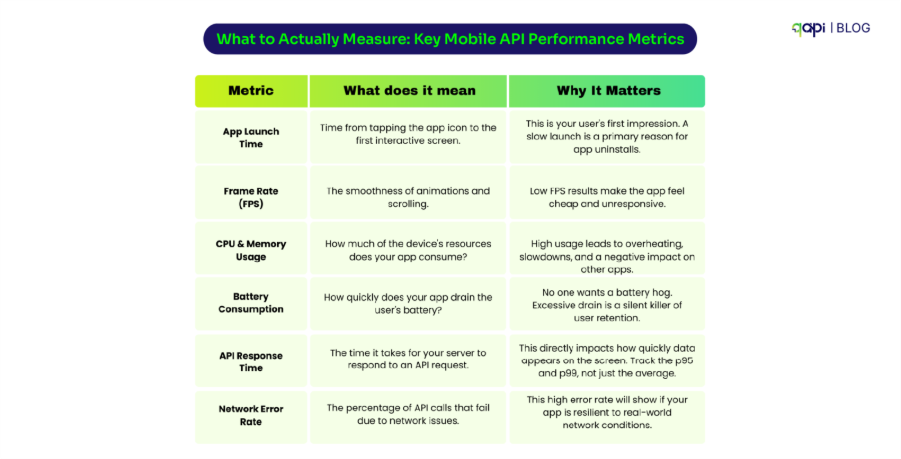

Next, performance testing becomes distributed: QA teams must now verify both individual service functions and smooth inter-service communication.

Test interdependencies also grow: one service’s failure cascades across integration tests.

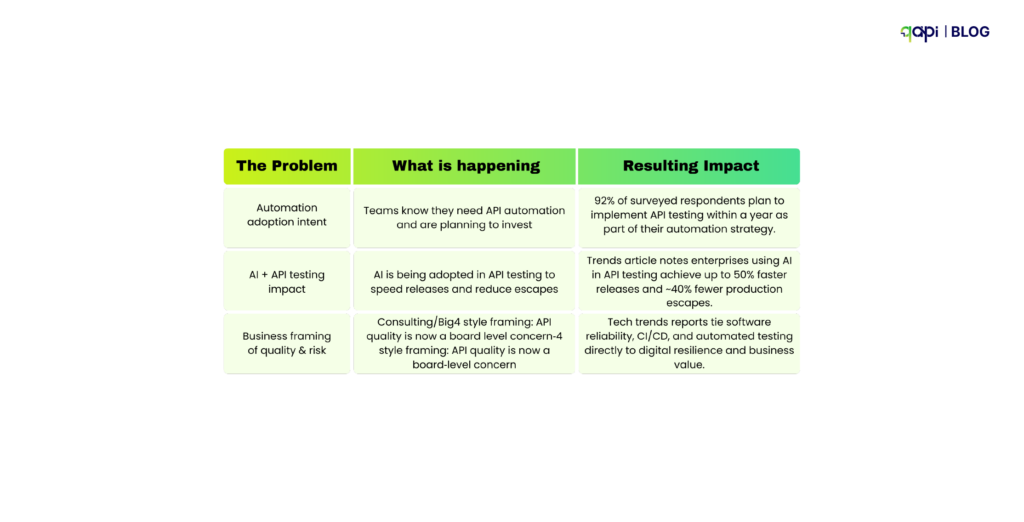

This is why API performance issues are so hard to diagnose in microservices environments, and why many teams delay addressing them altogether. In fact, 43% of enterprises report postponing API testing initiatives due to insufficient technical capability, not lack of intent.

Adoption Gaps: The Skills & Coverage Problem

Although 91% of developers and testers say API testing is critical, 50% lack the tools and processes to automate it effectively. The adoption landscape reveals:

How to simplify and eliminate these problems

My take: you need to reduce and simplify.

After all, like me, you’ve been on the internet, in some cases for longer than I have. Add up your years of reading, YouTube, podcasts, and even Reddit scrolling, and you have consumed enough to be convinced that this is simply the way things have to be.

So, start by reflecting on what is killing your team’s momentum.

Time? Budget? Complexity? Context switching? You’ve already seen the end result.

You already have a fairly clear idea of what needs to go; now you need to find a good replacement.

You should start with a simplified API testing tool.

Read, apply, examine why it works or why it does not

You don’t want to just jump into a tool. You want to understand why it works for you, or why it doesn’t, and be able to explain your choice in specific detail.

That’s why we offer a free trial for all new users and enterprises.

There’s no way around it. You have to make many strategic decisions (some good, some bad, but mostly wise) before your needs change.

Every time you make one and analyze the outcome, you’re practicing and building a system that will help you and your team immensely.

Judgment only improves with volume. You want to run 100 tests. Write 100 test cases. Generate 100 reports. Edit endpoints 100 times on the dashboard.

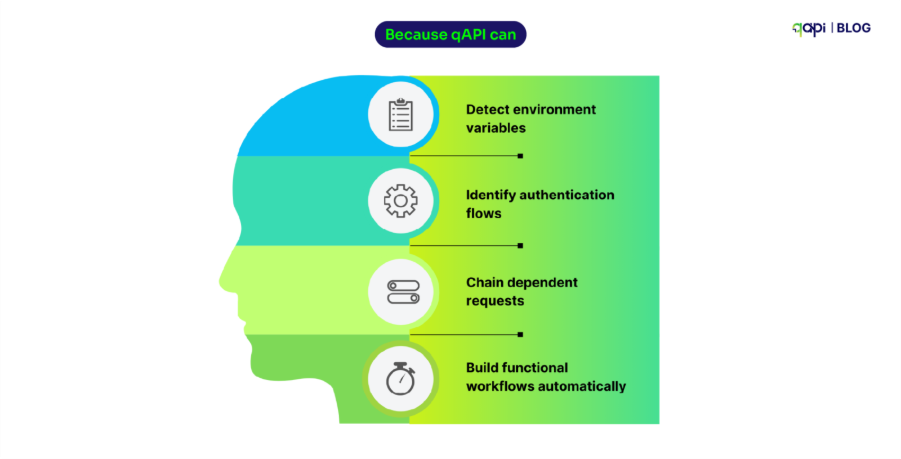

And when AI does most of the legwork, running an extra 160 tests doesn’t feel like a chore, and you feel like you’re only getting started. That’s what qAPI does for you.

Final thoughts

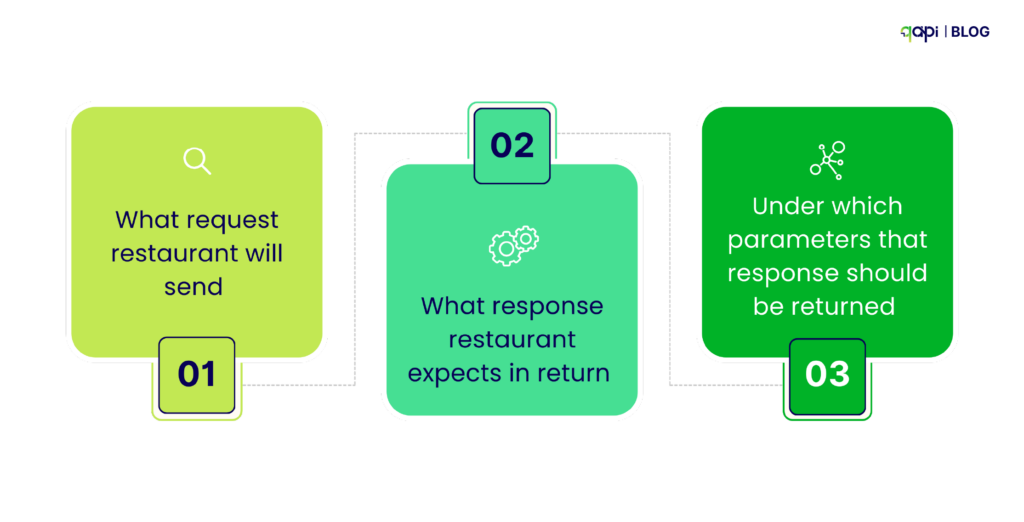

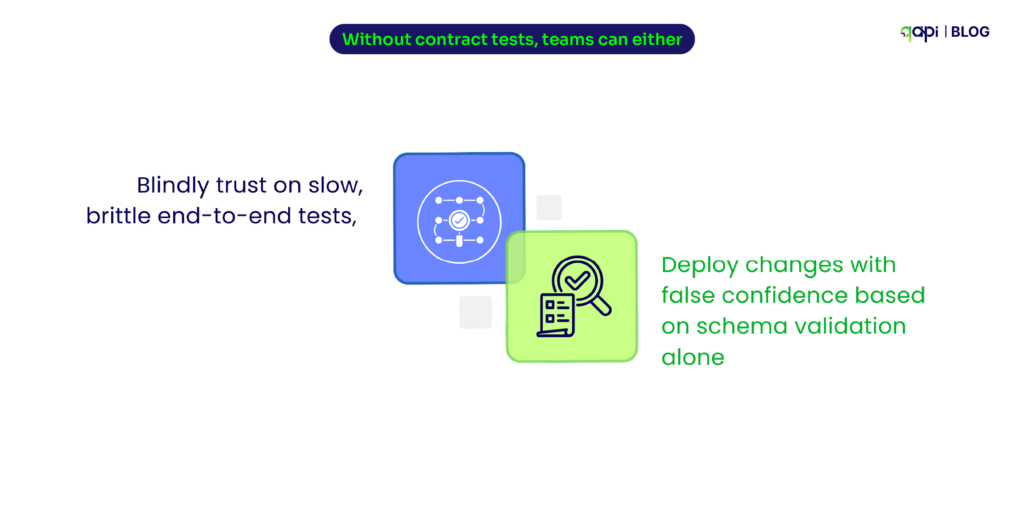

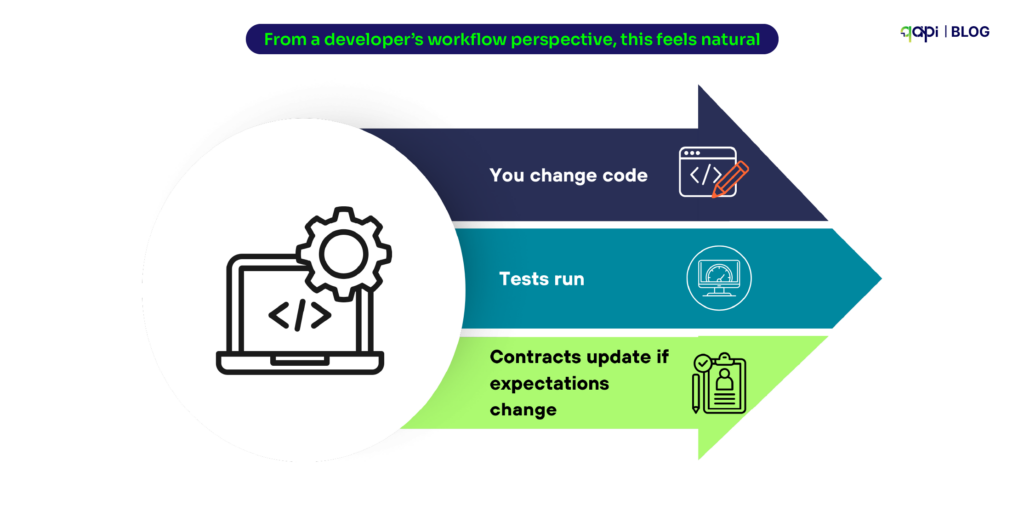

Industry data shows that formal API contract testing adoption remains low. The reason is not awareness; it’s friction. Today, contract testing requires additional frameworks, cross-team coordination, and ongoing maintenance.

In a world where anyone can build an API, this friction creates an adoption barrier that teams rarely clear.

qAPI embeds contract validation directly into everyday API testing through schema validation. This removes the need for parallel tooling and allows teams to detect breaking changes early, without increasing operational complexity or requiring specialized DevOps investment.
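The article doesn't describe qAPI's internals, so the sketch below only illustrates the general idea behind schema-based contract checks. It validates each response against the fields and types consumers rely on, and it flags schema changes that would break them. It uses a deliberately tiny, hypothetical contract format (field name mapped to a Python type) rather than full JSON Schema.

```python
from typing import Any

# Hypothetical consumer contract: required field -> expected Python type.
ORDER_CONTRACT: dict[str, type] = {"id": int, "status": str, "total": float, "items": list}

def contract_violations(body: dict[str, Any], contract: dict[str, type]) -> list[str]:
    """Return every way a response body breaks the consumer contract."""
    problems = []
    for field, expected in contract.items():
        if field not in body:
            problems.append(f"missing field '{field}'")
        elif not isinstance(body[field], expected):
            actual = type(body[field]).__name__
            problems.append(f"field '{field}' expected {expected.__name__}, got {actual}")
    return problems  # extra fields in the body are allowed

def breaking_changes(old: dict[str, type], new: dict[str, type]) -> list[str]:
    """Compare two contract versions; fields added in `new` are not reported."""
    changes = []
    for field, old_type in old.items():
        if field not in new:
            changes.append(f"removed '{field}'")
        elif new[field] is not old_type:
            changes.append(f"'{field}' changed {old_type.__name__} -> {new[field].__name__}")
    return changes
```

Adding fields is treated as non-breaking, while removing a field or changing its type is flagged. This mirrors the usual rule for backward-compatible API changes.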

The companies that will win are the ones that slow down, make the change, and move on.

Reduce Noise in Engineering Pipelines

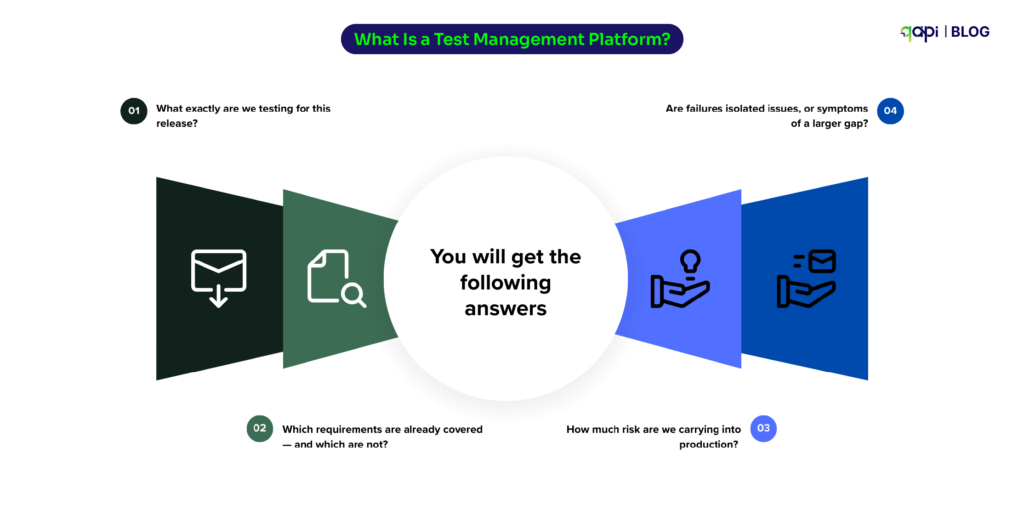

One of the most common complaints from engineering teams is that automated testing produces too much noise: results that teams learn to ignore. Tests pass, fail, and rerun, yet real production issues still escape.

The root cause, as I see it, is a narrow testing focus. Many tools validate endpoints in isolation, missing failures that only appear across business workflows.

qAPI shifts testing from endpoint verification to end-to-end API workflows. It does this by aligning test coverage with how systems actually operate. This improves signal quality and allows engineering teams to trust test results as a basis for release decisions.
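To illustrate the shift from endpoint checks to workflow checks, the sketch below chains three calls and asserts invariants that span them, such as the fetched order belonging to the user who created it. `FakeShopAPI` is a hypothetical in-memory stand-in for real endpoints; the article does not specify how qAPI models flows.

```python
import itertools
from typing import Any

class FakeShopAPI:
    """Hypothetical in-memory stand-in for three endpoints of a shop API."""

    def __init__(self) -> None:
        self._ids = itertools.count(1)
        self.users: dict[int, dict[str, Any]] = {}
        self.orders: dict[int, dict[str, Any]] = {}

    def post_user(self, email: str) -> tuple[int, dict[str, Any]]:
        uid = next(self._ids)
        self.users[uid] = {"id": uid, "email": email}
        return 201, self.users[uid]

    def post_order(self, user_id: int, prices: list[float]) -> tuple[int, dict[str, Any]]:
        if user_id not in self.users:
            return 404, {"error": "unknown user"}
        oid = next(self._ids)
        self.orders[oid] = {
            "id": oid,
            "user_id": user_id,
            "total": round(sum(prices), 2),
            "status": "pending",
        }
        return 201, self.orders[oid]

    def get_order(self, order_id: int) -> tuple[int, dict[str, Any]]:
        order = self.orders.get(order_id)
        return (200, order) if order else (404, {"error": "not found"})

def checkout_workflow(api: FakeShopAPI) -> list[str]:
    """Run create-user -> create-order -> fetch-order and check cross-step invariants."""
    problems: list[str] = []
    status, user = api.post_user("ada@example.com")
    if status != 201:
        return [f"create user returned {status}"]
    status, order = api.post_order(user["id"], [19.99, 5.01])
    if status != 201:
        return [f"create order returned {status}"]
    status, fetched = api.get_order(order["id"])  # reuse the id from the previous step
    if status != 200:
        return [f"fetch order returned {status}"]
    if fetched["user_id"] != user["id"]:
        problems.append("order is not linked to the user who created it")
    if fetched["total"] != 25.0:
        problems.append(f"unexpected total {fetched['total']}")
    return problems
```

Suppose `post_order` stored the wrong `user_id`. Every endpoint would still return the expected status code in isolation, and only the cross-step check would catch the bug.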

Address Test Maintenance Costs

At scale, test automation often becomes a cost centre. Enterprises routinely spend close to 60% or more of QA capacity maintaining existing tests rather than improving quality.

For you and your team this means:

• Slower release cycles

• Increasing QA headcount without proportional gains

• Growing frustration across engineering teams

qAPI reduces maintenance effort by eliminating script-heavy test design and relying on schemas and flows that naturally evolve with the system. This doesn’t eliminate maintenance, but it meaningfully reduces it, allowing QA capacity to shift toward coverage, performance, and risk mitigation.

The ROI comes from smart allocation of effort, not from cost-cutting.

Stabilize your CI/CD as a Governance Mechanism

CI/CD pipelines are often framed as productivity tools, but at the leadership level they function as governance mechanisms. When pipelines are unreliable, teams bypass controls and quality drops sharply.

qAPI improves pipeline reliability by producing deterministic results tied to contracts and flows rather than fragile assertions. For leadership, this means pipelines regain their role as trusted quality gates, enabling faster decision-making without compromising standards.

qAPI provides a combined view of API interactions across services, enabling teams to see dependencies, execution paths, and failure propagation. This visibility supports better architectural decisions and reduces reliance on stale data.

qAPI applies AI adaptively, simplifying test creation, highlighting impactful changes, and improving failure analysis, without affecting system behavior or removing human oversight. This keeps you in full control without the extra effort.